Deep learning is a subfield of machine learning and is the main reason behind the current hype around artificial intelligence. If you hear something about an amazing AI application performing an impressive feat, chances are that this system uses deep learning.

But in what way is this learning different from other machine learning approaches previously discussed in That’s AI? And why is it referred to as ‘deep’?

Going deeper than before!

To better understand what makes deep learning so special, we first need to look at how more ‘classical’ machine learning models (also called shallow models) learn from their data. Some examples of these shallow models are highlighted in the articles about Classification, Regression and Clustering.

In (very) short, a shallow model analyzes the data it gets in its current form. For example, if a ‘shallow AI’ receives information about the height and weight of a person, then it will use these two information points to perform its task. It will not try to combine this information and compute something new – like the body mass index (BMI) for example, which is the weight divided by the height squared.

If we are curious about why such an additional feature could be useful to a ‘shallow AI’, then we – as humans guiding the AI implementation – need to compute this new feature ourselves and add it to the input data. In other words, for shallow models to work optimally, there needs to be a human in the loop somewhere to curate and prepare the data for the training.

Deep learning models are capable of going a step further and are therefore much less dependent on this constraint. In contrast to shallow models, deep learning models can use the input data they get and transform and combine it in such a way that the task becomes easier. For example, if the computation of the BMI helps to classify sick people from healthy ones, then the ‘deep AI’ will compute this information. Or if the detection of vertical stripes helps to detect if an image shows a zebra or a horse, then the AI will train itself to also look for these vertical stripes.

The great thing about deep learning models is that they can undertake this detection of patterns and the generation of new insights multiple times in a row. So if a deep learning model is more effective in combining the information from the BMI with the zebra stripes, it will do so.

To put it another way, a deep learning model is capable of extracting relevant features for the task at hand, without the need of a human in a loop. Step by step, a deep learning model finds new ways to combine relevant information into new characteristics and delivers these insights to the following steps in the analysis. This multi-step approach, using multiple levels of data abstractions, is the reason why we call this kind of learning ‘deep’.

However, it’s important to highlight that the ‘deep AI’ does not look at the dataset and take the conscious decision of looking for this additional information. The fascinating aspect here is that “form follows function”. The way deep learning models are created enables them to look for all possible data patterns, allowing them to create the most optimal functioning deep learning model. The fact that the additional feature might represent the BMI or some zebra-like stripes is more of a secondary effect. This is the consequence of the input data and the fact that such characteristics help to differentiate between patient groups or animals.

Deep (artificial) neural networks

The reason why deep learning models are capable of achieving these results is that they use artificial neural networks – in particular, artificial neural networks with multiple layers. There are many different ways – so-called architectures – a deep neural network can be built up, but the function of this multi-layered structure is mostly the same.

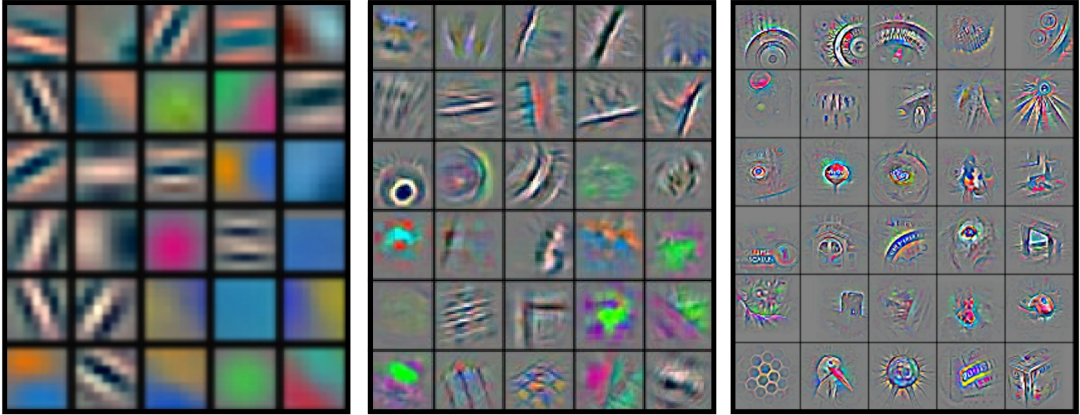

The first layers extract meaningful low-level features (e.g. finding horizontal or vertical lines in an image). The next layers might combine these insights into more mid-level features (e.g. are there squares, circles or zebra-like stripes in the image?) so that the last layers can combine these mid-level features into high-level features (e.g. detection of eyes, car wheels, etc.).

For the particular example shown in the figure above, the architecture type of such a neural network capable of vision is called a convolutional neural network. However, convolutional neural networks are not restricted to the vision domain; they can also be used for natural language processing, speech recognition, or time series forecasting, amongst others. Additionally, next to convolutional neural networks, there exists many other architecture types, including recurrent neural networks or deep reinforcement learning.

In a deep neural network, each layer allows the AI to transform the data in a new way and extract new additional important characteristics from it. To iterate the point once more, because of this multi-step or multi-layered learning approach, such AI models are called deep because they use deep neural networks to train. Hence the reason for calling this kind of learning deep learning.

Why now and not before?

In a previous article about Artificial Neural Networks we learned how these kinds of models are inspired by the way our brain works. While the theory behind such networks will soon be a century old,1 deep neural networks only entered our general consciousness in the early 2010s. The reason for this is due to the following three building blocks.

Data

The main commodity to train any AI model is data! Without enough data, the AI works in a mediocre fashion at best – but with enough data, the AI can do amazing things, such as drive a car, detect cancer, recognize voices and faces, write text, and even speak. However, in the case of deep learning, the amount of data needed to train it is huge – magnitudes bigger than what is required for ‘classical’ machine learning models.

Only since the arrival of big data has it been possible to sufficiently train neural networks. While some models are able to use a few thousand samples to train a simple deep learning model, the more complex the task at hand, the more data that is required.

For example, current state-of-the-art AI models capable of reading or writing text sometimes look at the full corpus of available texts in this language, e.g. combining all Wikipedia articles, publicly available books, and blog posts from social media together. Similarly, if we want to train a sufficiently useful AI model that can interpret images from around the world, we would need millions, if not billions, of images to train them.

Computing power

Another reason for the late arrival of deep learning is the staggering computational demand needed to train deep learning models. Analyzing these huge amounts of data also means that we need very powerful computers to execute the training.

Due to many different technological advancements, computers finally became powerful enough to train such deep learning models in recent years. For example, the introduction of multi-core computer chips paved the way for parallel computing, speeding up computation by a factor of two and more. This advancement was further helped by the realization that graphics cards, initially intended for video games, are perfectly set up to perform high-demand computations for deep learning.

While these innovations made the training of deep learning models possible for researchers and most people with a reasonably new computer, the true universal access to deep learning was achieved when big tech companies such as Google, Amazon and Microsoft provided the computational power of their supercomputers as a cloud service to everybody. This allowed many people around the world to explore and learn about deep learning and begin their journey with this new technology.

However, it is important to keep in mind that even with all of these advancements in technology and the availability of big data, the state-of-the-art deep learning models that we hear about in the news still take months to train on thousands of different computers, costing millions of dollars.

Human expertise

Last but not least, the reason why we are only recently hearing about deep learning is because of the theoretical work that had to be done first by researchers all around the world. While crucial mathematical concepts like ‘backpropagation’ have already existed for a few decades, many other routines first had to be established then, just as importantly, put into user-friendly computer programs so that everybody could profit from this new technology, rather than just a few researchers.

In the early 2000s, all of these important puzzle pieces – data, computing power, human expertise – came together. But it wasn’t until the 2010s that deep learning hit the big stage and everybody became aware of it.

What deep learning is capable of

At the beginning of the 2010s, deep learning was only capable of detecting cats in YouTube videos. At the time, this was an amazing achievement, hinting at the potential of this new kind of AI. But since these first initial baby steps, the research field around deep learning has moved forward in big strides, and many more useful and interesting AI applications have emerged.

The following list shows only a handful of examples where AI models perform impressive tasks by using deep learning. There are of course many more, and every year new and fascinating applications are showcased, opening the door to even more possibilities and potential wonders.

Computer vision

In the field of computer vision, deep learning models are capable of identifying objects in images – like identifying a pedestrian in front of a car, ‘recognizing’ your particular face in front of your smartphone, or detecting cancerous tissues in medical images.

Even more fascinatingly (and equally frightening), some deep learning models are capable of creating realistic-looking photos and videos of people that don’t exist (also known as deepfakes).

Natural language processing

In the field of natural language processing (NLP), deep learning models have learned to ‘understand’ text. They are able to translate text from one language into hundreds of others, or summarize lengthy texts into just a few paragraphs – most of the time as well as, or even better than, humans.

Language tools like Google Translate have become such a second nature to most of us that we forget what an astonishing feat it really is. Suddenly, content from other countries and cultures has come into reach, and language borders have been reduced significantly.

Speech recognition

In the domain of speech recognition, deep learning models have learned to understand human speech – so much so that talking to Apple’s Siri, Amazon’s Alexa or Google’s Assistant seems completely normal now. But understanding is only the first step; the second is the creation of speech.

Auto-generated voices, such as the ones from Siri and Alexa, sound more life-like and human with every passing year. From these achievements, it is only a small step for the AI to recognize your emotional state while talking, and identify gender and age-specific characteristics. Meaning that, in time, you can create an artificial audio recording in any language, with any annotation of gender, age and emotional tonation.

Learning to take decisions

Thanks to the combination of reinforcement learning and deep learning, the tech company DeepMind was able to create powerful AI models, capable of playing board and video games on a superhuman level, often beating the world champions of these games. Using this kind of approach, DeepMind was also able to solve a 50-year-old problem in biology, providing researchers and industry with an indispensable new tool that was previously thought of as either impossible or far out of reach.

Recommender systems

Deep learning is also behind the most successful recommender systems on platforms such as Netflix, Amazon, Reddit, Facebook and more. If a computer recommends content or a product to you, it has most likely come from a deep learning model.

Autonomous vehicles

Deep learning is also behind the most recent achievements with self-driving vehicles. While many of us might still be sceptical towards the capability of this new technology, we should keep in mind that only a few years ago we thought the same of language translation, speech synthesis and image manipulation. And yet today we have top translation programs, talk to our smartphones (which unlock themselves by recognizing our faces) and listen to audiobooks without realizing that they weren’t read by a human.

While the journey for fully autonomous transportation might still take some time (mostly due to regulations and missing trust), it should be evident that this new technology will eventually arrive – and once here, it will disrupt and change the way we drive our cars, transport our goods, and travel.

Is deep learning the solution to everything?

While deep learning has a lot of potential – with many more amazing things becoming possible once time has brought us even more data, more computational power and more scientific breakthroughs – it is certainly not the answer to everything!

Although alluring, even today deep learning is not always the best AI to solve a given problem. First, problems still exist where deep learning models cannot be applied. But perhaps more importantly, there are still a wide range of problems where ‘classical’ machine learning models (i.e. shallow models) are much better suited to the task than deep ones.

For some problems, classical models can be trained much quicker, cheaper and with less data. Trying to solve these kinds of problems with deep learning can instead result in a loss of both time and money. It’s like renting a refrigerated truck to do your groceries: sure you have more space, can better package bought goods, and they will stay fresh until you reach your home – but it might nonetheless be overkill if you just want to buy things for tonight’s dinner. Going to the shop on your bike will do just as well.

However, in the cases where it makes sense to use deep learning, it is an extremely powerful tool. And what is clear is that this is only the beginning of deep learning: the next decade will reveal what deep learning is truly capable of.

Even for those of us who are fully enthralled by this new AI approach, it’s not yet clear how far deep learning can bring us on the journey to achieve general artificial intelligence. But for now at least, it is our constant companion in this endeavor, and seemingly our best shot to reach the dream of general AI.

-

Thanks to the impressive work of Santiago Ramón y Cajal, we know about the neuron and its functions in our brain, and in the 1940s the concept of an artificial neural network was suggested by a group of researchers. ↩