Computer vision is a field of artificial intelligence concerned with giving machines the ability to perceive and understand the visual world, hence the name. More precisely, it’s about creating AIs capable of deriving meaningful information from images and videos which can then be applied to better understand, manipulate or even generate new visual media.

Vision is one of a human’s most fundamental abilities, yet teaching a machine to do the same is notoriously difficult. For us, ‘an image can speak a thousand words’, but a machine trying to understand what colored pixels on a screen might represent is hard. Very hard!

For more than half a century, researchers have taken the insights from biology about how human vision works and tried to incorporate these principles into machines. Unfortunately, in comparison to humans, the results were mediocre at best. That was until 2012, when a research team from Toronto was able to train an artificial neural network to recognize cats in YouTube videos. While this might not sound like much, it was this breakthrough that demonstrated the potential of neural networks and launched the current hype around AI and Deep Learning.

Ten years later, together with Natural Language Processing, computer vision is one of the most researched AI domains, with many different applications already present in our daily lives. It is thanks to computer vision that smartphones can read QR codes and use face detection to be unlocked. Computer vision allows cars to drive (almost) autonomously on the road. However, it is also this technology that is responsible for the infamous deepfakes.

So how does computer vision work? How were we able to finally crack this toughest of nuts?

How does computer vision work?

In the article Deep Learning, we discovered that the strength of deep neural networks lies in their ability to extract meaningful patterns from data, without any human intervention. To put this into context, if a ‘deep’ AI is tasked with classifying images of different animals, it will train itself to look for particular characteristics that help achieve this distinction between animals. Without any human intervention, the ‘deep’ AI might train itself to look for vertical stripes, the presence of feathers, whether or not they are blue or green, if the image contains a furry tail, and so forth. Depending on the data and the task at hand, the AI will learn what kind of features are important and helpful – and will ignore the ones that are not.

Convolutional neural networks

The true breakthrough for computer vision is due to convolutional neural networks. These are special types of deep neural networks capable of looking at a region of an image (in contrast to just individual pixels) and extract meaningful information from it. For example, does this region contain horizontal or vertical stripes, different sizes of dots, or is a particular color?

This might not sound like much, but it is exactly how the human brain makes sense of our visual world as well! Before convolutional neural networks, analyzing a specific region of an image and not just an individual pixel was very difficult.

As the name implies, the main trick behind these vision-capable AI models are convolutions. ‘Convolution’ has different meanings in different scientific domains, but in the world of AI, convolutions are unique regional information patterns that occur in the data that helps to perform the AI task.

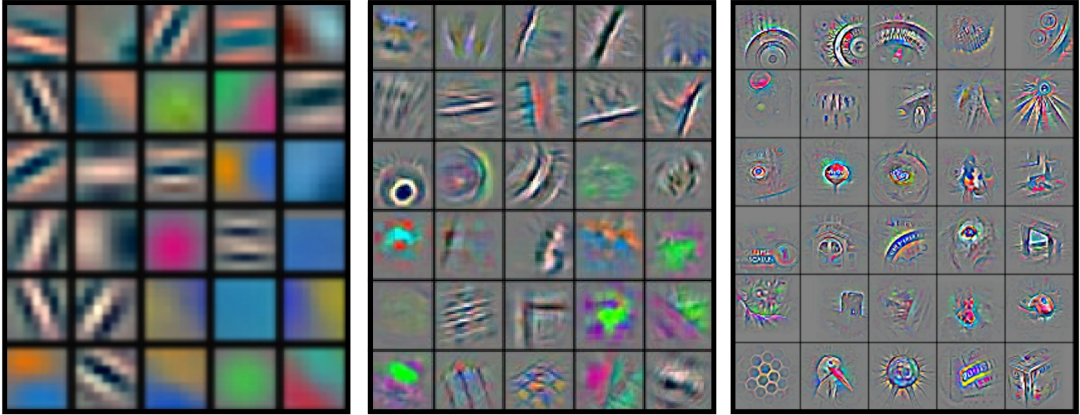

In computer vision, where the data is usually two-dimensional, a convolution could for example be a particular 2D pattern in the data (e.g. a straight line in a particular direction), as shown in the following figure on the left. A deep neural network could now learn in the first layer of its network to look for 30 different and useful convolutions (i.e. visual patterns), and extract how often they appear and roughly where. In other words, in the first convolutional layer, such a model could teach itself what kind of low-level features to look for.

In a second convolutional layer, the model could then try to combine the information from all of these visual patterns and try to identify some more nuanced characteristics, as shown in the middle of the figure. Combining this information once more, the model could eventually merge this more nuanced information and create even more detailed convolutions that are able to detect if the original image contained wheels, honeycombs, and more.

This kind of approach is more or less how our brain carries out this task. First, low-level features like shapes and forms are extracted from the visual input. Then this information is combined into mid-level features, such as colors and motion, which eventually allows the brain to detect high-level features like faces or animals.

Visual embedding

In the previous example, it is easy to understand how an AI can eventually learn to identify a zebra, some honeycomb, or a bicycle by developing a convolution for vertical stripes, hexagonal shapes, and round wheels. But learning an individual high-level convolution for each object that exists would be very inefficient.

That’s why, similar to the word embedding approach from NLP models, the main goal of computer vision models is to take a visual input and embed it in a high-dimensional space. Meaning it’s not about knowing that an image clearly contains honeycombs or car wheels, but more so about finding an embedding space where each dimension is a useful visual characteristic.

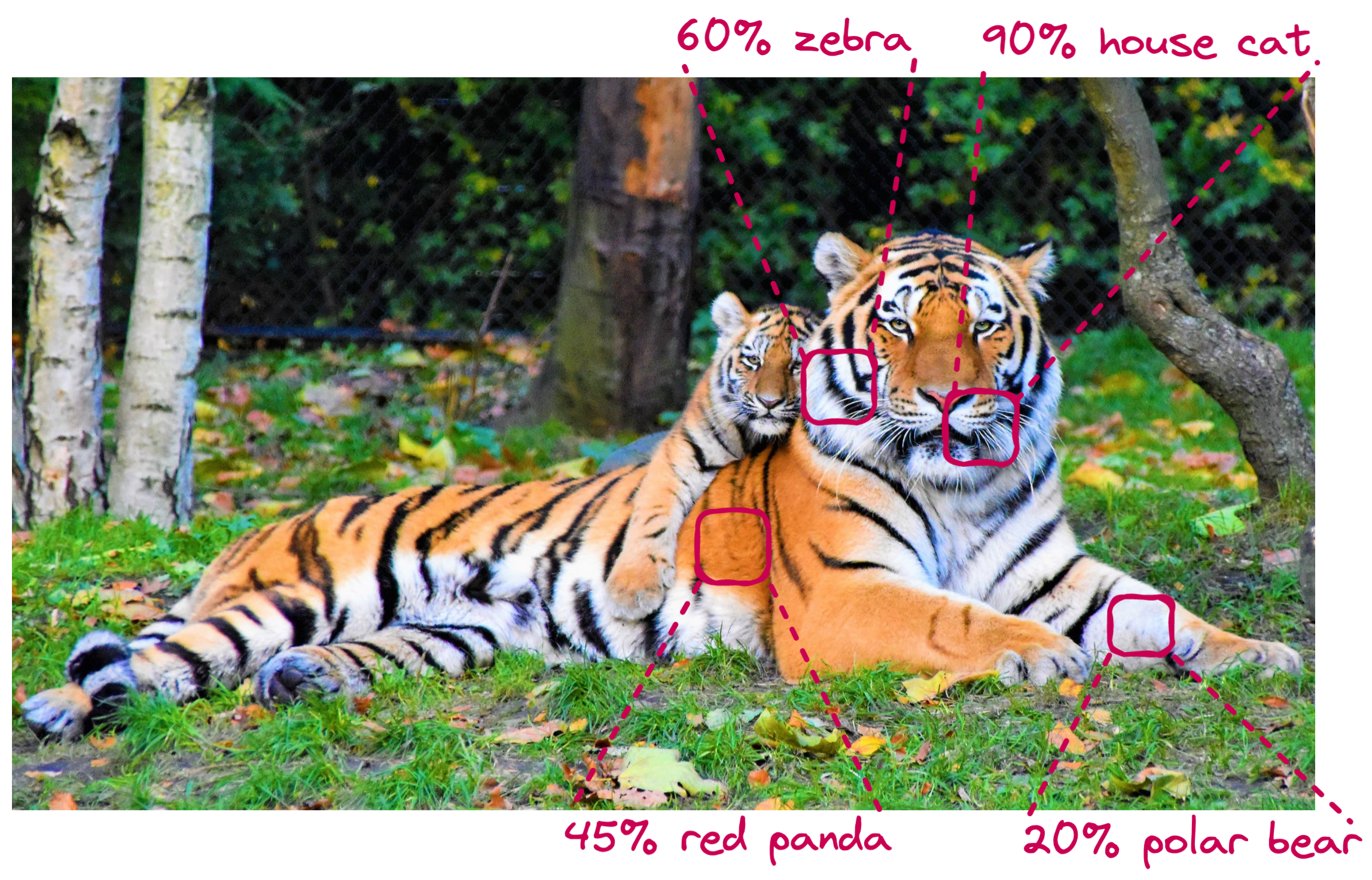

Ultimately, it’s about detecting patterns and characteristics in the dataset that can then be used to sufficiently characterize any image. For example, an image of a tiger could be embedded as being 60% zebra, 90% house cat, 20% polar bear and 45% red panda.

So instead of giving an image one clear characteristic (e.g. ‘it has features of a tiger’), the AI can use multiple, more generic characteristics (‘it looks orange and white, with stripes, is cat-like with big feet’) to describe the image.

Using such an approach, computer vision models are capable of describing almost any image sufficiently well in just a few thousand features. Do note, however, that these few thousand features might not always be understood by humans – but they allow a machine to understand the visual content and represent an image in a compact, high-dimensional space. To learn more about high-dimensional space and embedding, please read the NLP and Dimensionality Reduction articles.

While this kind of neural network architecture was the main reason behind the new hype for deep neural networks, convolutional neural networks are not the only ones capable of extracting meaningful information from visual data. Just recently, the computer vision field was introduced to Transformer models, another type of neural network architecture stemming from the NLP domain. How exactly these Transformer models work, and in what way they ‘see’ the world differently to convolutional neural networks, is the subject of heated debate between researchers and is outside of the scope of this article. But what is clear is that the domain of computer vision is ever evolving and new neural network architectures are constantly being developed.

What kind of tasks can computer vision carry out?

Throughout the process described above, an AI can learn to disassemble images and videos in such a way that meaningful information can be extracted. From this meaningful information, another AI (or the same) can then be trained to perform a multitude of different tasks. Let’s take a look at a few of them.

Image classification

The goal of image classification is to identify if a particular object is present in an image or not. For example, does an image contain a dog or a cat? Does an X-ray show a cancer, lesion, or nothing? Does your photo on social media also show your friend Arnaud as well? Is this photograph from a journalist real or has it been Photoshopped?

Object detection

Object detection goes one step further than simple image classification by also being able to say where in an image the object of interest is. For example, when an autonomous vehicle is able to say where in the image a pedestrian is positioned, detecting damaged products on an assembly line, or helping a face detection algorithm to find faces in a photo.

Object detection allows the AI to quickly identify a particular object and cut this section out so that it can be used for further analysis. This helps to reduce the computational load during an AI’s task as, for example, a face detection AI only needs to look for nose, eyes and ears in the region of an image where a face was detected.

Image segmentation (semantic segmentation)

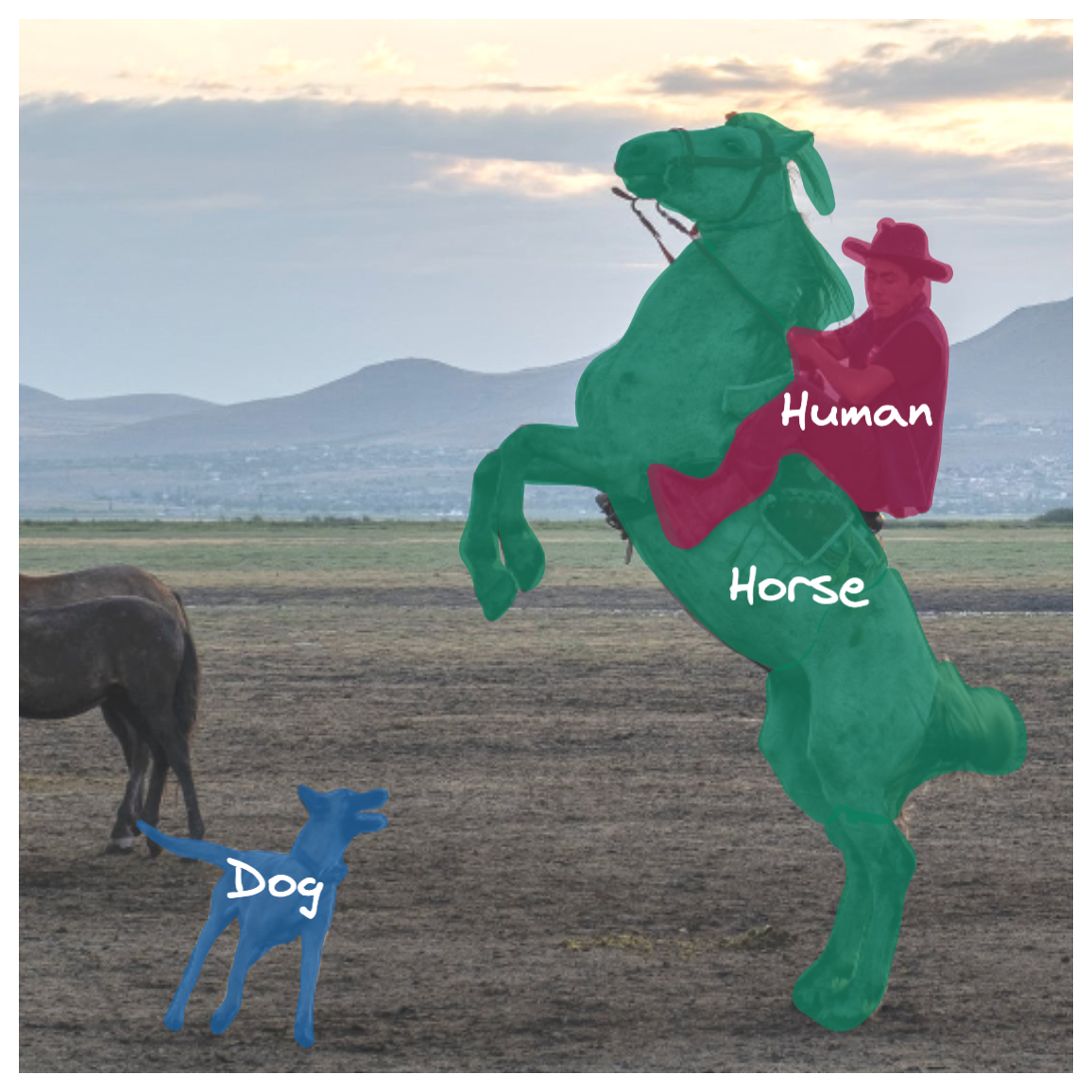

While object detection is capable of identifying where in the image a particular object type can be found, image segmentation (also called semantic segmentation) can identify which part of the image belong to a particular object.

As an example, knowing the exact extent and shape of an object can be used in the medical domain to quickly assess the spatial properties of bacteria or blood cells, or help autonomous vehicles to better interpret traffic signs.

Object tracking

Applying object detection on multiple images in a row allows an AI to track objects in a video. This can be used in autonomous cars to track and predict the path of surrounding cars, bikes and pedestrians. In the context of COVID-19 health measurements, such an approach could also be used to track people in public spaces and ensure they keep a minimum amount of social distance.

Content-based image retrieval

Knowing if a particular object is present in an image is already a huge achievement. Content-based image retrieval goes one step further by giving the image text labels, which allows us to search and retrieve particular images from huge datasets.

It is this kind of AI model that allows us to search through all of our holiday videos and quickly find the exact time point where a “fish jumped onto our kayak while we passed below a tall bridge”. It also allows us to search online for ‘photos of lakes with lots of trees but no tourists in them’.

Image processing

In contrast to the passive role of AI in the previous examples, computer vision models can also be used to manipulate and transform the content of images and videos. Just like a human skilled in Photoshop, an AI can be trained to remove noise from images, correct marks from the aging of old pictures, remove motion blur from videos, or colorize black and white pictures to name just a few applications.

AI can even be trained to enhance an image to a higher resolution than it was recorded in – something called ‘super resolution’. Similarly, AI can also be used to restore lost parts of an image or remove unwanted objects from pictures, such as tourists or buildings.

Image generation

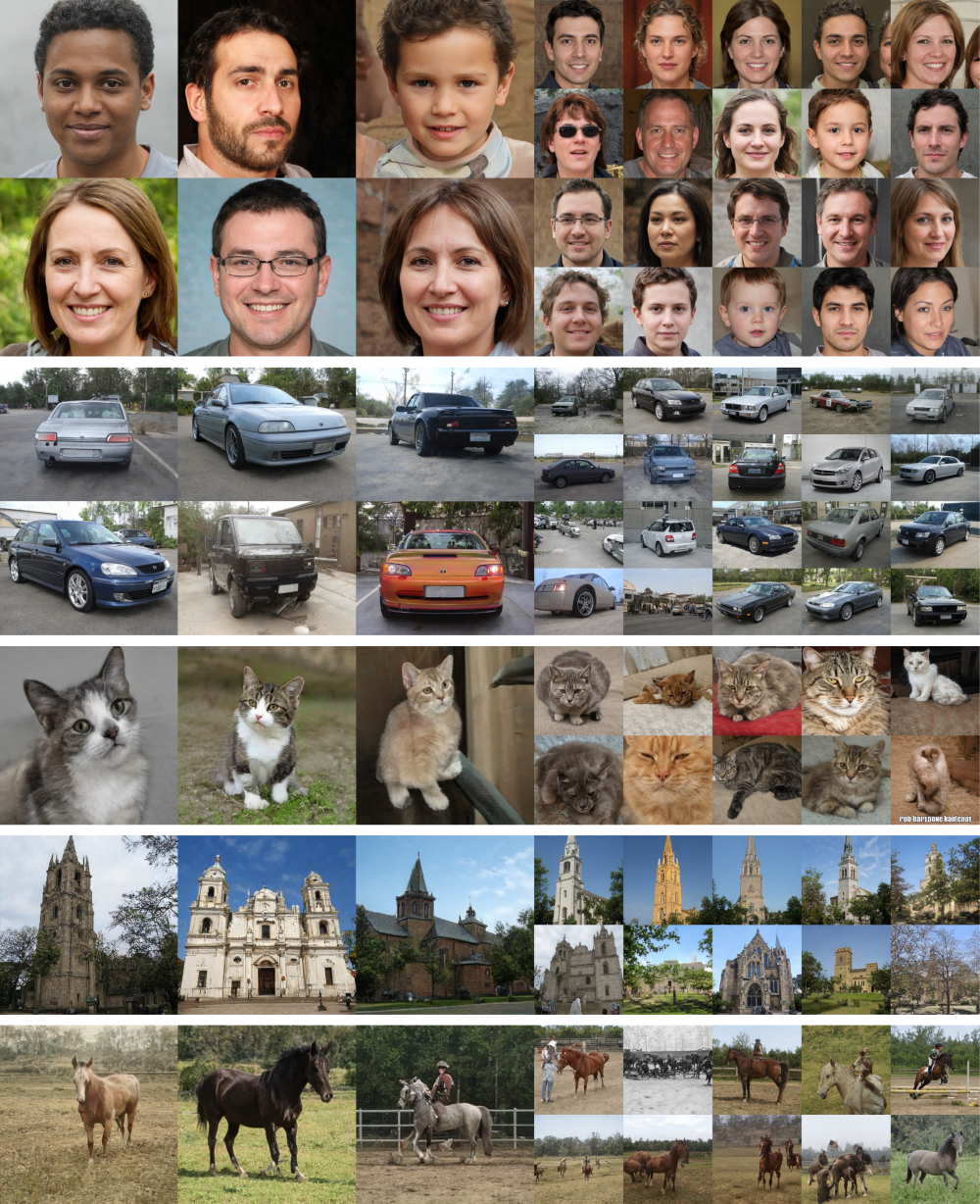

Probably one of the most exciting – but equally horrifying – new AI capabilities is the artificial creation of realistic-looking images. This new technology will equally provide us with lots of joy and power to express ourselves, but will also further challenge the way we interpret digital visual media.

While the creation of deepfakes might be the most well-known example, image generation goes much further and allows the creation of seemingly any kind of realistic-looking images, as the following collage of artificial images can show.

But the generation of fake images isn’t just about generating new random objects from scratch. Computer vision AIs are also capable of understanding human language to manipulate the content in an image in a very specific, but equally authentic and realistic-looking way. Let’s look at two famous examples.

OpenAI’s DALL·E, named after famous artist Salvador Dalí and Pixar’s WALL·E, is capable of generating new images on text command alone. The following examples show four drawing commands: (left) a tapir made of accordion; (middle-left) illustration of a baby hedgehog in a Christmas sweater walking a dog; (middle-right) a neon sign that reads “backprop”; (right) the exact same cat on the top as a sketch on the bottom.

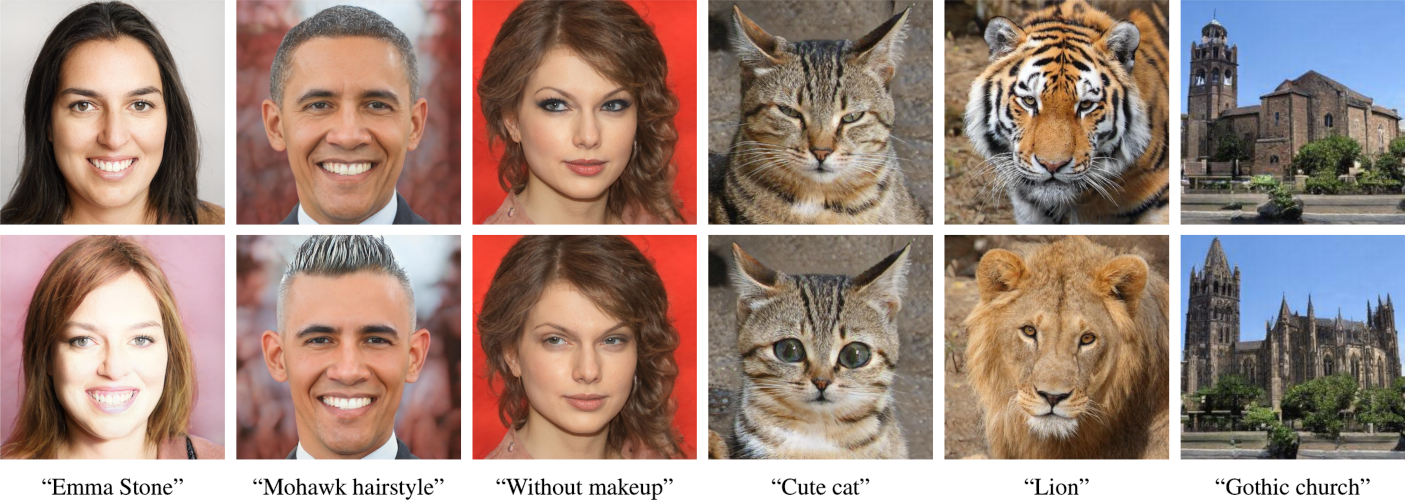

Whereas DALL·E creates new images out of thin air, StyleCLIP takes already existing images and manipulates them according to text-based instructions. In the following illustration, you can see the original image in the top row and the AI-manipulated image in the bottom row based on the text instructions underneath.

It is important to remember that all of these images (the ones from DALL·E and StyleCLIP) were generated by the AI alone – no additional human intervention took place. By looking at 400 million text-image pairs, these AIs were able to untangle the intricacies between the description and the content of the image and learned how something needs to look to satisfy the description.

Application of computer vision

Together with NLP, computer vision is among the hottest topics in AI research, with multiple new applications being developed every month. To do this versatile domain justice, we will explore a few more examples – keep in mind that these are all applications that are already in use.

Manufacturing

In the world of automated manufacturing, computer vision models can be used to reduce the amount of human intervention to a minimum. In a rice processing line for example, an AI can quickly identify bad grains and initiate their removal. Or in a processing line producing bottled drinks, a computer vision model can swiftly detect whether a bottle is fully closed and otherwise remove the faulty product.

Computer vision can also be used to improve the robots working in factories. Through better assessment of an object’s position, shape and orientation, a robot arm is much more likely to pick up the object in an optimal way.

Meanwhile, at the end of the manufacturing process, computer vision AI can inspect the final product and swiftly identify defects by looking for damage or comparing the final output against a predefined set of quality standards – all without human intervention.

Agriculture

In the domain of agriculture, computer vision AIs can be used in a diverse number of settings. Farmers can use drone footage to assess the state of their fields with regards to disease detection or deficit in nutrition or water. The footage from these drone flights can then be used to identify the ideal harvesting time and predict the most likely yield. Furthermore, the combination of computer vision with autonomous vehicles can lead to fully automated harvesting.

However, AI is not just for the harvest – computer vision can also be used to monitor the health of livestock. Using video surveillance, computer vision AI can be used to detect diseases, report unusual behaviors, or indicate if an animal is giving birth. Furthermore, such AI can also be used to support a farmer by counting the livestock and verifying that the animals have sufficient access to food and water.

Healthcare

In the domain of medical care, computer vision can be used to help specialists analyze CT, X-ray and MRI images to quickly localize diseases or problematic regions. Furthermore, computer vision AI can be used to prepare a multitude of such medical images and provide useful information (e.g. size and shape of organs, segmentation of different tissue types, and 3D model reconstruction). While such AI might not yet be able to replace experienced medical personnel, they are certainly capable of speeding up processes to allow users to make smart and informed decisions.

In a more personal fashion, AI systems can use photos from your smartphone camera to detect if an anomaly on your skin might be melanoma skin cancer, even if it’s still in the early stages. Furthermore, researchers are currently developing AI systems capable of predicting the full content – plus estimated caloric intake of your food and beverages – by analyzing just a single photo of your dinner plate, thus creating awareness and potentially helping with better diet choices.

Computer vision can also be used to improve the lives of visually impaired people by supporting them with their everyday tasks, the navigation of the world, communication with others, and perceiving visual information in a non-visual way.

Retail

In the world of retail, computer vision can be used to help the consumer as well as the seller. Using smart mirrors in shops (or just simply smartphone apps), clothing stores can combine computer vision with augmented reality to show what you would look like if you bought a new clothing item, without the need to actually try it on.

In retail stores, an AI can analyze videos in real-time and scan for suspicious or problematic activities, such as theft, physical assault or accidents. By informing the store owner and staff instantly, measures can be taken swiftly, thus helping to ensure the safety of everyone involved.

Meanwhile in supermarkets, computer vision can be used to quickly track the full inventory on shelves and identify if a product needs to be replenished, thus helping staff with inventory management and identification of sale candidates. Furthermore, by analyzing foot traffic and customer counts, AIs can gather all the information needed to better understand customer behavior and ensure safety regulations, such as maximum occupancy. The same approach can also be used to estimate waiting times in queues and inform customers accordingly.

Challenges with computer vision

Computer vision has the same challenges as many other AI domains: huge amounts of data and computers with mind-boggling computation power need to be run for months on thousands of machines to achieve top AI models capable of autonomous driving or generating new images based on language commands alone. Researchers are working to come up with new neural network architectures and techniques to reduce the number of images required to train such computer vision models.

And even if we have enough images and all the computation power required, the results might still be insufficient for some computer vision applications. This is due to the fact that our physical world is not always ‘black or white’ – visual input can be very ambiguous. Due to unfavorable light conditions or viewing angles, objects might not always be what they seem. A computer vision AI can only learn from the visual inputs it receives, along with labels and text descriptions that humans provide. As long as the AI is still dependent on our supervision, it will also inherit our flaws and misconceptions during this process.

While computer vision AIs are already impressive in recognizing objects in images, they are still flawed. The AI doesn’t really understand what the object really represents as humans do. The only thing the AI does is analyze the patterns in the image and determine that the thing in the image looks more like object A than objects B, C or D, etc. This makes this process vulnerable to something called ‘adversarial attacks’.

Adversarial attacks can be used to make an AI believe that it perceives object B, even though it looked at object A. By knowing what features the AI model is looking for to identify object B, a malicious person can add very small patterns of this object to an image of object A, thus fooling the AI into thinking that it looks like object B. The important nuance here is that the small patterns added to the image of object A don’t need to be too striking, they just need to be enough to make the AI’s decision flip to the other object.

Such adversarial attacks are not exclusive to computer vision and can take place in almost all fields of AI, but they are particularly worrying if we think about the implications they could have for computer vision. In the case of self-driving cars for example, an attacker could change the appearance of a stop sign just slightly enough to make it appear to the car to be a speed sign for 100 km/h.

Summary

Computer vision is a fascinating and quickly evolving field of AI. New applications are developed every month, many of them finding their way into our daily lives, often without us even noticing them. While things like autonomous cars and a face detection app might be known by most of us, many other computer vision AI applications are also already available.

For example, if you need a fake face for your marketing campaign, need to upscale a low-resolution image, remove objects from your holiday photos, or change your appearance in photos, all of this is possible with the right application.

Knowing more about the many different types of computer vision models and what kind of applications already exist will help you to better judge both the potential and risks of this new technology.