How AI Imitates Humans – and Excels Beyond Them

In our previous article, we looked at how artificial intelligence (AI) systems are able to use datasets to accurately predict human features, such as weight or height. Now that we understand a little more about how AI functions and how it differs from plain, old-fashioned software, we can ask ourselves this question: why do we call such systems artificial intelligence?

We might start by considering what makes humans – and to a certain extent animals – intelligent. Living things have the ability to acquire knowledge and skills, and then apply them in lots of different ways.

Humans and animals are…

beings which adapt their behavior based on experiences.

AI systems are doing something very similar; they acquire and master new skills and abilities by looking at data and using it to develop new ways of completing tasks. We could refer to them as…

computer systems which adapt their behavior based on data.

But this is quite a mouthful, even though it’s an accurate description.

So for simplicity we use the term “artificial” because we’re talking about computer systems and algorithms, and “intelligence” because these systems adapt their behavior based on data, just like living things.

Humans and AI systems approach learning in different ways, but there are a remarkable number of parallels in the way each system develops and then applies intelligence to achieve goals. To uncover these parallels, we’ll take a closer look at three of the dominant fields in AI research:

- How AI learns how to read and write.

- How AI learns how to see and draw.

- How AI learns to learn, decide, fail, and learn some more.

How AI learns how to read and write.

The ability to communicate is one of the main features of human intelligence. Being able to transmit our thoughts, intentions, and ideas in the form of text and speech is the major differentiator between us and the rest of the animal kingdom. Even so, understanding what another person is saying can be tricky – especially if they’re speaking in another language.

Early computer programs assisted us with communication by making our text easier to read and correcting our spelling and grammatical mistakes. More recently, we’ve seen programs that can translate our text from one language to another. The early versions of translation software were quite basic and tended to be more amusing than useful. They would look up each word in two sets of vocabulary and perform a more or less literal, word-for-word translation.

The English "Hello, welcome to That's AI",

became the German "Hallo, willkommen zu Das ist KI".

This was a good starting point, but these simple programs failed to understand context, nuance, or underlying meaning. Sometimes a word-for-word translation yields a nonsensical result, like this amusing French-to-English example:

The French "Mon avocat est périmé",

referring to the avocado fruit,

became the English "My lawyer has expired".
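To see why this breaks down, here is a minimal Python sketch of the kind of word-for-word lookup those early programs performed. The tiny French-to-English vocabulary `TOY_FR_EN` and the function name are hypothetical, invented for illustration rather than taken from any real translation product. Because each word maps to a single fixed meaning, "avocat" always becomes "lawyer", whatever the sentence is about.

```python
# A naive word-for-word translator: every word is looked up on its own,
# so the surrounding context can never influence the choice of translation.
# TOY_FR_EN is a hypothetical, hand-made vocabulary used only for illustration.
TOY_FR_EN = {
    "mon": "my",
    "avocat": "lawyer",  # could also mean "avocado", but the table stores one meaning
    "est": "is",
    "périmé": "expired",
}


def translate_word_by_word(sentence: str, vocabulary: dict[str, str]) -> str:
    """Translate each word independently, leaving unknown words unchanged."""
    words = sentence.lower().split()
    return " ".join(vocabulary.get(word, word) for word in words)


print(translate_word_by_word("Mon avocat est périmé", TOY_FR_EN))
# prints: my lawyer is expired
```

The result is just as wrong as the example above: a lookup table has no way to notice that the sentence is about fruit.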

As you may remember from the previous article, computer programs do not need AI technology to perform word-by-word spell checks or translations; no data is analyzed and no information patterns are explored.

AI researchers have set out to improve these basic tools with the latest AI technology, hoping to create systems that will be able to help humans read, write and talk. The AI research field concerned with this topic is called natural language processing (NLP).

One of the challenges for AI systems in this field is learning how text is structured. By sifting through huge amounts of written text and analyzing which words appear next to each other, which appear in positive or negative contexts, which express happiness or sadness, seriousness or humor, the AI system can identify the information patterns that make up the English language.

It can then do the same for other languages and learn how to carry meaning and context accurately from one language to another. Each word, sentence, and context makes a tiny contribution to the AI system’s ability to predict which words should come next in a sequence, and which words and phrases are correct translations of each other.
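The idea of counting which words follow which can be sketched with a bigram model, one of the simplest statistical language models. The Python sketch below assumes a tiny hypothetical corpus, and the helper names are invented for illustration. Modern NLP systems use neural networks trained on vastly more text, so treat this as an illustration of the principle of predicting the next word, not as how production systems are built.

```python
from collections import Counter, defaultdict
from typing import Optional

# A hypothetical miniature corpus; real systems learn from billions of words.
CORPUS = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the sofa",
]


def train_bigrams(sentences: list[str]) -> dict[str, Counter]:
    """Count, for every word, how often each other word directly follows it."""
    following: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` is unknown."""
    counts = model.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


model = train_bigrams(CORPUS)
print(predict_next(model, "the"))  # prints: cat
print(predict_next(model, "sat"))  # prints: on
```

Each sentence nudges the counts a little, which is a very small-scale version of "each word makes a tiny contribution" to the prediction.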

Like humans, AI systems start with the basics – beginning by learning how to translate word by word. As they repeat the translation process, they gradually develop the ability to understand context, structure and meaning.

This creates a philosophical question for us to consider: can we call an AI system “intelligent”, or is it simply learning how to mimic intelligence? Does it “understand” the words it is using, or is it just repeating linguistic patterns it thinks are right for the situation?

By looking at lots and lots of text and finding out which words are used together, AI systems can build up impressive capabilities. Once a system has learned which words are used when and in which context, it can spell check your text and suggest synonyms.

It can, for example, improve your professional documents with more sophisticated vocabulary, or suggest stronger ways of phrasing social media posts. These AI systems can then translate that text into hundreds of different languages, far faster than any human could and often with impressive accuracy.

These same AI systems are used to improve the performance of chatbots on websites, helping users to avoid long and complicated interactions with a call center. They also allow Alexa, Siri, and other digital assistants to better understand what you’re saying.

Such systems can not only translate text into standard English but also write it in different styles, for example in the style of William Shakespeare or Jean-Jacques Rousseau.

From there it’s only a small step to creating original text from scratch. Before long, an AI system will be able to write a new Harry Potter novel, in the style of Shakespeare, and read it out loud in the voice of Barack Obama.

It is at that point we will once again have to ask ourselves deep, philosophical questions about what it means to be intelligent.

How AI learns how to see and draw.

Another important aspect of intelligence is the ability to see and recognize objects. Until very recently, computer programs found it very difficult to distinguish between images of cats and dogs. Yet this is an incredibly simple task for humans, even for children who have seen only a few pictures of each.

Solving this problem of visual identification is not as easy as it might seem. Firstly, we have to identify specific features which we believe will help us to distinguish a cat from a dog. We might do this by paying attention to the shape or placement of the eyes, ears, or snout. We might also consider the posture of the animal and how it sits or stands.

But then how do we translate this into a computer program? How will the program be able to locate the animal in an image and distinguish it from the background? And how will it be able to detect the animal’s eyes, ears, and snout?

These are problems we have to solve before we can even start to apply some form of judgment that designates an image as “cat-like” or “dog-like.” Writing such a program from scratch is definitely not a straightforward exercise.

Recent advances in deep learning, one of the fields of AI research, have given AI systems the ability to perform visual identification tasks much more accurately than before. Once again, the key to this breakthrough is AI’s ability to process millions of images, look for hidden information patterns, and assign each of them a parameter: a small numerical weight that reflects how much the pattern matters.

For example, an AI system can learn to detect whether an image contains a lot of stripes, in which case it could be looking at a tiger or a zebra. It can also look for shifts in color across an image: green at the bottom and blue at the top, for instance, tends to indicate land and sky.
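To make the color-shift example concrete, here is a hand-written Python check for "blue above, green below". It is only an illustration under stated assumptions: the image is a hypothetical grid of (red, green, blue) tuples, the function names are invented, and the whole point of deep learning is that a system discovers cues like this by itself instead of having a programmer write them.

```python
# An image represented as rows of (red, green, blue) pixels, each value 0-255.
Pixel = tuple[int, int, int]


def mean_channel(rows: list[list[Pixel]], channel: int) -> float:
    """Average value of one color channel (0=red, 1=green, 2=blue) over some rows."""
    values = [pixel[channel] for row in rows for pixel in row]
    return sum(values) / len(values)


def looks_like_sky_over_land(image: list[list[Pixel]]) -> bool:
    """Hand-written cue: the top half is mostly blue and the bottom half mostly green."""
    half = len(image) // 2
    top, bottom = image[:half], image[half:]
    blue_on_top = mean_channel(top, 2) > mean_channel(top, 1)
    green_below = mean_channel(bottom, 1) > mean_channel(bottom, 2)
    return blue_on_top and green_below


SKY = (90, 150, 235)
GRASS = (60, 170, 50)
landscape = [[SKY] * 4, [SKY] * 4, [GRASS] * 4, [GRASS] * 4]
print(looks_like_sky_over_land(landscape))        # prints: True
print(looks_like_sky_over_land(landscape[::-1]))  # prints: False
```

A deep learning system would not receive a rule like `looks_like_sky_over_land`; it would end up with parameters that happen to respond to the same kind of color shift.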

The strength of deep learning approaches is that humans do not need to specify which patterns to look for; the AI system learns them for itself. Given this autonomy, AI systems start to do something very interesting after only a short time.

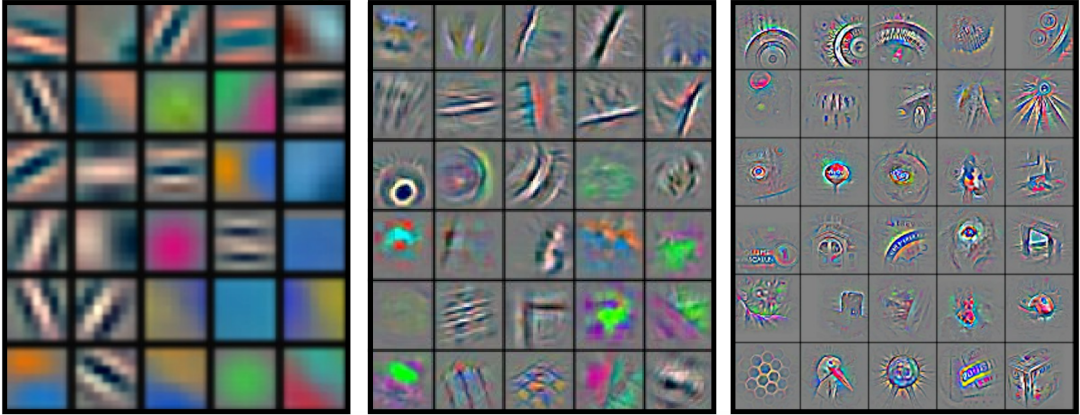

After allowing an AI to look at millions of images depicting 1,000 different objects, researchers checked to see what sort of pattern the AI was looking for. They found the following image:

This image might not mean much to us, but it explains how the AI system looks at things. It checks an image for horizontal and vertical lines, and for lines at every angle in between. It also looks for specific colors and how they tend to shift from one to another.
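Line detectors of this kind are usually small grids of numbers (filters) that slide across the image; in deep learning this operation is called a convolution, and the first layer of a convolutional network learns filters that often resemble line and color detectors. The Python sketch below uses two hand-picked 3×3 Sobel filters on a hypothetical 5×5 grayscale image containing one bright vertical line. In a trained network the filter values would be learned from data, not written by hand, and the helper names here are invented for illustration.

```python
# Hand-picked 3x3 edge filters (Sobel kernels). A trained network learns its own.
VERTICAL_EDGES = [[-1, 0, 1],
                  [-2, 0, 2],
                  [-1, 0, 1]]
HORIZONTAL_EDGES = [[-1, -2, -1],
                    [0, 0, 0],
                    [1, 2, 1]]


def convolve(image: list[list[float]], kernel: list[list[int]]) -> list[list[float]]:
    """Slide the kernel over every 3x3 patch (no padding, kernel not flipped,
    as in deep learning libraries) and record the weighted sum."""
    height, width = len(image), len(image[0])
    output = []
    for y in range(height - 2):
        row = []
        for x in range(width - 2):
            total = sum(kernel[dy][dx] * image[y + dy][x + dx]
                        for dy in range(3) for dx in range(3))
            row.append(total)
        output.append(row)
    return output


def edge_strength(image: list[list[float]], kernel: list[list[int]]) -> float:
    """Total absolute filter response: large when the image contains that kind of line."""
    return sum(abs(value) for row in convolve(image, kernel) for value in row)


# A hypothetical 5x5 grayscale image: dark background with one bright vertical line.
vertical_line = [[0, 0, 1, 0, 0] for _ in range(5)]
print(edge_strength(vertical_line, VERTICAL_EDGES))    # prints: 24
print(edge_strength(vertical_line, HORIZONTAL_EDGES))  # prints: 0
```

The vertical-line filter reacts strongly and the horizontal-line filter not at all; a network combines many such responses in later layers.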

Remarkably, this is very similar to what happens in our visual cortex, the region of the brain responsible for visual perception. Neuroscientists have known for decades that the visual cortex contains specialized neurons that respond to very similar patterns: horizontal and vertical lines, color shifts, and more. Without being told to do so, these AI systems are imitating natural processes to a surprising degree.

Once the AI system has detected enough patterns in different parts of the image, it learns how to combine them to recognize features such as eyes, ears, and snouts. From there, it can eventually learn whether the way these features appear makes the image more likely to show a cat or a dog.

By looking at lots of images, the AI gradually tunes its internal parameters until these judgments become reliable. In other words, it learns – and as a result becomes able to “see”.
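What "tuning internal parameters" means can be shown with a much smaller model than a deep network. The Python sketch below trains a logistic-regression classifier by gradient descent on two hypothetical, already-extracted features per animal, "ear pointiness" and "snout length", both invented for illustration and scaled from 0 to 1. A deep network makes the same kind of repeated small adjustments, only to millions of parameters at once and starting from raw pixels.

```python
import math

# Hypothetical training data: (ear_pointiness, snout_length) -> 1 for cat, 0 for dog.
EXAMPLES = [
    ((0.9, 0.2), 1), ((0.8, 0.3), 1), ((0.7, 0.1), 1),
    ((0.3, 0.8), 0), ((0.2, 0.9), 0), ((0.4, 0.7), 0),
]


def predict(weights: list[float], bias: float, features: tuple[float, float]) -> float:
    """Probability that the features describe a cat (logistic function of a weighted sum)."""
    score = bias + sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-score))


def train(examples, epochs: int = 1000, learning_rate: float = 0.5):
    """Nudge each parameter a little in the direction that reduces the prediction error."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in examples:
            # Gradient of the log-loss with respect to the score.
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * f for w, f in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(EXAMPLES)
print(predict(weights, bias, (0.85, 0.25)) > 0.5)  # prints: True (looks like a cat)
print(predict(weights, bias, (0.25, 0.85)) > 0.5)  # prints: False (looks like a dog)
```

No rule about ears or snouts is written anywhere; the three parameters simply settle at values that separate the examples.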

Computers struggled with the image recognition problem for a very long time. It wasn’t until 2012 that Google finally developed a program that could reliably recognize cats in images.[1] That AI system had abilities similar to those of a small child and could perform rudimentary tasks with sufficient accuracy.

From there it took only five more years for AI systems to develop the ability to create fake videos, sometimes referred to as “deepfakes”. Deepfakes are images or videos in which a person’s face is convincingly replaced by the face of someone else.[2] They can reach a degree of detail and realism that most people couldn’t achieve even with years of experience in Photoshop.

Since then, AI systems have developed the ability to detect cancer in X-ray images with an accuracy that, in some studies, exceeds that of professional radiologists.[3] All of these different tasks are performed with the same underlying process: looking for patterns in the images and fine-tuning the parameters to achieve a desired result.

As you might expect, the advances haven’t stopped there. More recently, AI systems have become much more effective at using the patterns they identify. Once they had learned how to detect faces and facial features in a given photo, using that information to generate entirely new, artificial faces was only a small step away.

For an endless feed of artificially generated faces, check out thispersondoesnotexist.com/ and refresh the page as often as you like.[4] As the name of the website suggests, these people do not exist – the images you are looking at are 100% machine-generated.

Now that AI systems can detect a specific object in an image, they can also go a step further and create a new version of the image without the object in it. As a result, these new systems are able to remove unwanted objects from holiday or wedding photos. Bystanders, tourists, stains on your shirt, birds flying past… all can be removed at the touch of a button, leaving you with the perfect image.[5]

These systems will soon be able to automatically Photoshop your holiday photos, create paintings in the style of your favorite artist,[6] and even create new episodes of your favorite TV show at short notice.

What will we think about the nature of intelligence, creativity and imagination when all of this is possible?

How AI learns to learn, decide, fail, and learn some more.

In the previous examples concerning reading and seeing, we’ve seen how AI systems look at vast amounts of data, identify information patterns, and then refine lots of parameters to predict an outcome.

Put simply, AI systems learn what certain words and objects represent; in other words, they learn what patterns “mean”, in a way that’s very similar to what humans do in the classroom. We learn what letters and objects represent, what they stand for, and what they look like, so that we can recognize them again in the future.

However, our lives aren’t structured around neat patterns, and our experiences are never repeated in exactly the same way. Most of our important insights and skills are developed outside of the classroom and in the real world. Here, what really matters isn’t what an object represents, but how we interact with it – and what the consequences of that interaction will be.

For example, what is the quickest way to work? Should you go by car or bike? What does the weather look like? If you’re already late for work, should you compromise your safety by not taking the time to put your helmet on? Is it worth the risk to save those extra 30 seconds? All of these considerations and trade-offs inform our decision-making.

Developing AI systems that can make multiple decisions in succession is a field of AI research called reinforcement learning. These AI systems are placed in environments where they can interact with their surroundings and make decisions, and, depending on the actions they take, they are either rewarded or punished. In other words, they learn through the reinforcement of good behavior.

Let’s consider an example. Imagine a park in the middle of the city with some trees and a bird looking for something to eat. In this scenario, the bird is the “actor” (usually referred to as the “agent”) who is trying to find the quickest way to get some food. The bird might fly around the park (the “environment”) to find food, and in doing so encounters a variety of situations.

Eventually the bird decides to land and search the ground for food, but it is attacked by a cat. It survives, but the bird learns that its decision to spend too much time on the ground led to a situation where it was nearly eaten. So in the future it decides to spend more time in the air where it is safer.

In another scenario, however, it finds a hot dog van in the corner of the park and discovers lots of crumbs on the ground beside it. In this case, it learns that spending time near places where people gather is a good idea that brings rewards.

Reinforcement learning AI systems work in much the same way. They act as agents in a given environment and make decisions based on previous experiences. They observe the outcomes and learn which actions result in rewards and which result in punishments.
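The bird’s trial-and-error learning can be sketched in Python as an epsilon-greedy learner, a simple strategy from the reinforcement learning toolbox for choosing between a few options (a so-called multi-armed bandit, which leaves out the idea of changing situations). The three actions and their reward values are hypothetical numbers chosen to mirror the story: the ground sometimes brings crumbs and sometimes a cat, the air is safe but brings nothing, and the hot dog van reliably brings food.

```python
import random

ACTIONS = ["search_ground", "stay_in_air", "visit_hot_dog_van"]


def reward_for(action: str, rng: random.Random) -> float:
    """Hypothetical park environment: positive numbers are rewards, negative are punishments."""
    if action == "search_ground":
        return -10.0 if rng.random() < 0.3 else 1.0  # a cat attack is very costly
    if action == "stay_in_air":
        return 0.0  # safe, but no food
    return 2.0  # crumbs next to the hot dog van


def learn(steps: int = 1000, epsilon: float = 0.1, seed: int = 0) -> dict[str, float]:
    """Estimate each action's average reward by trial and error (epsilon-greedy)."""
    rng = random.Random(seed)
    value = {action: 0.0 for action in ACTIONS}  # current estimate of each action's reward
    count = {action: 0 for action in ACTIONS}    # how often each action has been tried
    for step in range(steps):
        if step < len(ACTIONS):
            action = ACTIONS[step]                # try everything once
        elif rng.random() < epsilon:
            action = rng.choice(ACTIONS)          # explore: occasionally try something random
        else:
            action = max(ACTIONS, key=value.get)  # exploit: do what has worked best so far
        reward = reward_for(action, rng)
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]  # running average
    return value


values = learn()
print(max(values, key=values.get))  # prints: visit_hot_dog_van
```

The small amount of random exploration is what lets the bird keep checking whether its beliefs are still right; with `epsilon=0` it would only ever repeat what it already knows.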

This type of AI system is quite different from the two other systems we encountered earlier. In our previous examples, the AI systems were given data to look at so they could identify information patterns. With reinforcement learning, the AI system creates its own data by being an active participant in an environment of rewards and punishments. Through trial and error, these AI systems learn to make better decisions based on their own experiences.

Reinforcement learning (RL) is still a relatively new field in AI research, and there are not yet many systems in our everyday lives that use this approach. This is because even basic RL systems need thousands of iterations of trial and error in a controlled environment to succeed. These environments take a long time to build and simulate, so progress tends to be relatively slow compared to other AI systems. Nevertheless, this field of research is very promising because it’s similar to the way humans learn to behave in environments and make decisions.
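When decisions come in succession, the learner also has to work out which earlier moves led to a later reward. Tabular Q-learning is one classic RL algorithm for this. The Python sketch below uses a hypothetical five-cell corridor with food at the far right end: the agent starts on the left, can step left or right, and is given 500 practice episodes, adding up to thousands of individual moves. The environment, rewards, and learning settings (`alpha`, `gamma`, `epsilon`) are all invented for illustration.

```python
import random

N_CELLS = 5          # cells 0..4; the food is in cell 4
ACTIONS = (-1, +1)   # step left or step right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Hypothetical corridor environment: returns (next_state, reward, episode_finished)."""
    next_state = min(max(state + action, 0), N_CELLS - 1)  # walls at both ends
    if next_state == N_CELLS - 1:
        return next_state, 1.0, True  # reached the food
    return next_state, 0.0, False


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[s][i] estimates the long-term reward of taking ACTIONS[i] in state s.
    q = [[0.0, 0.0] for _ in range(N_CELLS)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of each practice episode
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                i = rng.randrange(2)                       # explore, or break a tie randomly
            else:
                i = 0 if q[state][0] > q[state][1] else 1  # exploit the better-looking action
            next_state, reward, done = step(state, ACTIONS[i])
            # Move the estimate towards reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][i] += alpha * (target - q[state][i])
            state = next_state
            if done:
                break
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_CELLS - 1)]
print(policy)  # prints: ['right', 'right', 'right', 'right']
```

The reward only ever arrives in the last cell, yet after enough episodes the discounted estimates carry that information back to the very first move.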

Indeed, RL technology has been responsible for some of the most dramatic breakthroughs in AI in recent times. It was an RL system that successfully learned how to play multiple Atari video games just by looking at the pixels on the screen.[7] Reinforcement learning was also a key technology in AlphaGo, the program developed by Google’s DeepMind, which defeated Lee Sedol, one of the world’s leading Go players, four games to one in 2016.[8]

The same team then turned its attention to biology: in 2020, its AlphaFold system, which relies on deep learning rather than reinforcement learning, made a breakthrough on the 50-year-old problem of predicting how proteins fold.[9] Reinforcement learning is also increasingly used in robotics and autonomous drones, both of which provide scenarios where thousands of test trials can be conducted safely in controlled environments.

Closer to home, SBB CFF FFS, the Swiss Federal Railways, recently announced the start of the Flatland challenge. This is an online competition that encourages people to use reinforcement learning to find optimal ways of managing traffic on complex rail networks.

Summary

At present, AI systems can read and write without truly understanding what words mean; they can see and draw without really understanding what the shapes and objects in an image represent; and they can make decisions through trial and error without the experience of actually living.

Whether or not these activities mean we can consider AI to be smart, intelligent, or even creative is a philosophical discussion for another day. For now, we hope this article has given you an understanding of the underlying mechanics of AI systems, and of how their abilities can be explained by entirely clear and rational processes.

[1] For more on this, you can read Google’s blog post announcing the achievement or this www.wired.com article reporting on it.

[2] For more about deepfakes, including an example video, see the corresponding Wikipedia article. The prefix “deep” in deepfakes comes from the fact that these fake images are generated with deep learning.

[3] The study showing this impressive achievement was published in Nature and can be checked out here.

[4] The curious reader might also want to check out cats, art, or horses that don’t exist.

[5] For a video of this technology in action, check out the developers’ YouTube video here.

[6] This technique is also referred to as “style transfer”; check out this link to read more about this approach.

[7] You can find the scientific publication connected to this here.

[8] For more on the story of how AlphaGo won against Lee Sedol, check out the corresponding Wikipedia article or the AI developers’ homepage.

[9] For more on the story of AlphaFold, check out the corresponding Wikipedia article or the AI developers’ homepage.