We use our senses to make observations and collect information about ourselves and the world around us. We also use sensors and devices to capture information for us. These information gathering processes help us to gain knowledge and learn new skills.

We’re able to evaluate situations in front of us and use our internal logic and reasoning to make decisions. Should I turn left or right? Can I make that jump across the stream? Where is the best place to hide the children’s birthday presents?

Whenever we record information, we create data. In our article The building blocks of AI, we emphasized the importance of data in the development of artificial intelligence (AI) systems. Humans need information to learn and make decisions; AI systems need data. As Michael Dell, founder of Dell Technologies, stated: “Artificial intelligence is your rocket ship and data is the fuel.”

But what is data? And are there different types of data?

Take the website you’re currently looking at – it is data. The text you’re reading conveys information through language; the images present visual information in the form of colors and shapes; the HTML code that tells your device how to display this website is also data. It’s likely that your data provider is currently measuring its size and charging you accordingly.

Let’s take a look at the image below. It shows the sort of data that we can gather with or about everyday items. We can see their color and shape or measure their size, weight, and temperature. We can also capture aspects like language used, model type, or expiration date. These days some items even collect data for or about us all by themselves. They may capture our browsing and shopping history, store our images and playlists and track our movements and activities. As you can see, there is a large variety of information that can be gathered – and all that results in lots of data.

Data comes in many different formats: text, images, audio, etc. Each format conveys information in its own way, so we choose our formats depending on the nature of the task at hand.

If we want to identify the different Swiss mountains in our holiday photos, using image data is the obvious choice compared to verbal descriptions of the mountains. Similarly, recognizing a classical composer might be easier from an audio recording than from a sheet of music.

What we will discover.

We will look at the different types of data that exist and ask what sort of insights humans and AI might be able to gain from them. We will also look at the variety and complexity of data.

The aim is to explore how humans use these forms of data, which will help us understand what we can expect from the AI systems. What is it that we want AI to learn from these data types?

We will also introduce you to different AI applications to illustrate what has already been achieved – and what might become mainstream soon. This will further highlight why data has become such a valuable commodity these days.

As there is a wide range of data types in use, we have split this topic into two parts. In this article we will be discussing the most common data formats:

1. Tabular data

2. Text

3. Audio data

4. Visual data: images and videos

In the next article we will focus on less familiar data types:

5. Temporal data and time series

6. Networks

7. Geospatial and location data

8. Emotions

9. The internet of things

So, are you ready? Let’s jump in!

1. Tabular Data

What pops into your mind when you think about data? Most people conjure up images of spreadsheets, or lists of numbers and characteristics. Basically they are thinking about tables of data.

At school, several data tables would have been familiar to you on a daily basis. Your timetable, multiplication tables, and vocabulary lists are all examples of what we call tabular data.

| Id | Age (years) | Height (cm) | Weight (kg) | Hair color | Sporty | Sex/Gender |
|---|---|---|---|---|---|---|
| 1 | 16 | 174.3 | 58.6 | Brown | Yes | Female |
| 2 | 25 | 166 | 64.3 | Blond | No | Male |
| 3 | 2 | 88.8 | 11.9 | Black | No | Female |
| 4 | 61 | 175.8 | 72 | White | Yes | Male |
| 5 | … | … | … | … | … | … |

Spreadsheets and tables are among the most common data formats, which is why they’re some of the first things we think of when we consider the idea of data. The key aspect of tabular data is that it is structured, both visually and conceptually.

For each item in a table we record the same characteristics or pieces of information, e.g. height, weight, hair color, etc. Each characteristic has its own vertical column and we record all the relevant information for each individual item in a horizontal row. This way of organizing the data makes it easy to analyze and understand. It also makes the data easy for machines to process.
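To make the row-and-column idea concrete, here is a minimal Python sketch. It stores the four complete rows of the example table as a list of records and then reads values column by column. The field names (`height_cm`, `sporty`, and so on) and the helper functions `column` and `mean` are our own choices for illustration, not a standard.

```python
# Each dictionary is one row of the table; each key is one column.
# The values are copied from the four complete rows of the example table.
people = [
    {"id": 1, "age": 16, "height_cm": 174.3, "weight_kg": 58.6,
     "hair": "Brown", "sporty": True, "sex": "Female"},
    {"id": 2, "age": 25, "height_cm": 166.0, "weight_kg": 64.3,
     "hair": "Blond", "sporty": False, "sex": "Male"},
    {"id": 3, "age": 2, "height_cm": 88.8, "weight_kg": 11.9,
     "hair": "Black", "sporty": False, "sex": "Female"},
    {"id": 4, "age": 61, "height_cm": 175.8, "weight_kg": 72.0,
     "hair": "White", "sporty": True, "sex": "Male"},
]


def column(rows, name):
    """Return every value stored in one column, in row order."""
    return [row[name] for row in rows]


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


# Because every row has the same columns, questions about the data
# become short, mechanical operations.
sporty_heights = [row["height_cm"] for row in people if row["sporty"]]

print(column(people, "age"))           # [16, 25, 2, 61]
print(round(mean(sporty_heights), 2))  # average height of the sporty people
```

The same question asked of a free-form description of these four people would first require finding where each height is mentioned, which is exactly the work a fixed structure saves.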

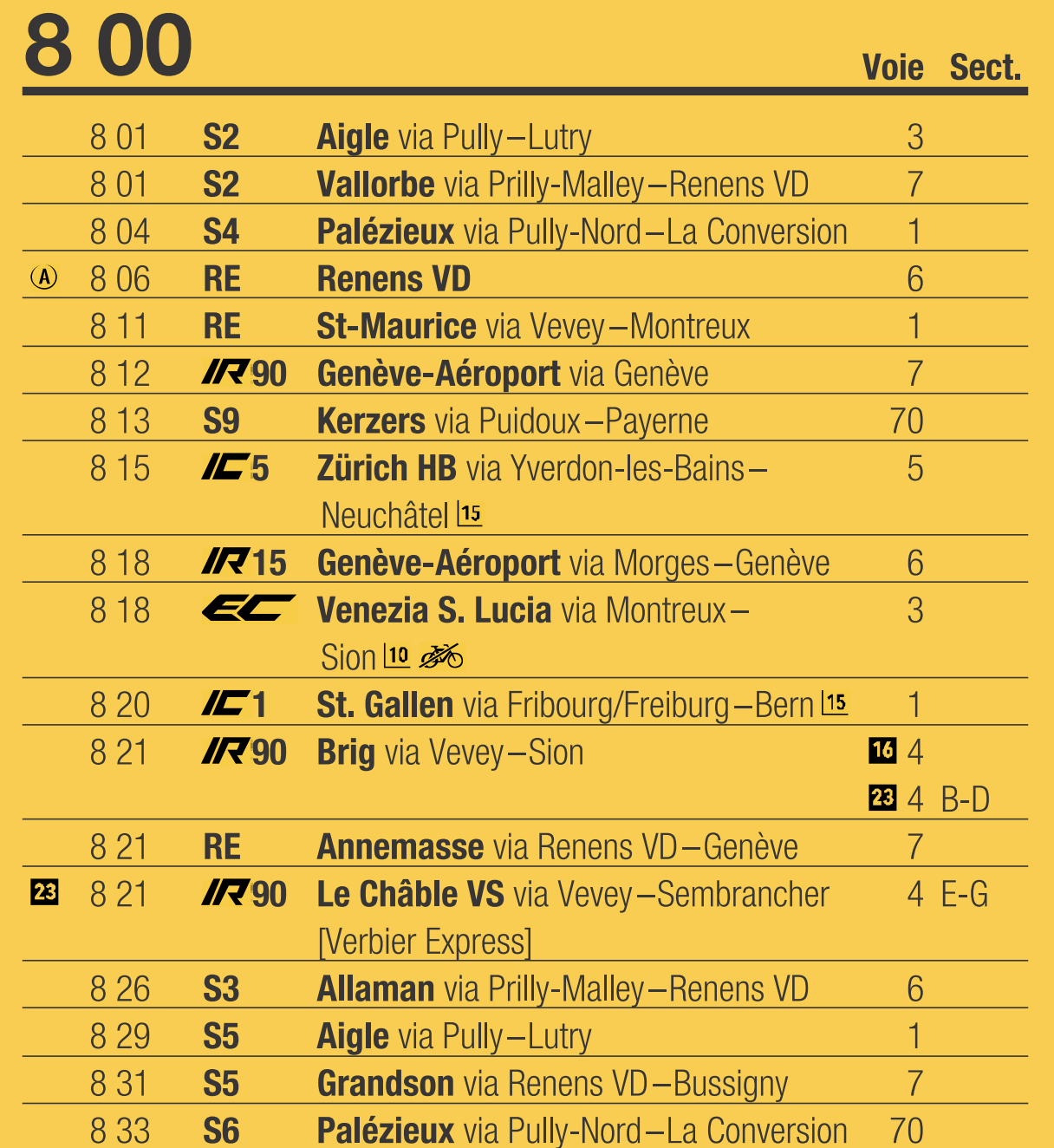

Let’s take a closer look at the train timetable below. The information for each train is given in a row, and every column captures a specific detail: departure time, train number, destination and platform. The columns make it easy for you to limit your search by different criteria, e.g. the next train for Geneva leaves at 8:08 from platform six, or the next train on platform five goes to Geneva Airport.
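Searching a timetable by column can be sketched in a few lines of Python. Note the assumptions: only the Geneva departure at 8:08 from platform six and the Geneva Airport train on platform five come from the text above. Every other value (train numbers, the remaining times and destinations) is invented for illustration, as is the function name `next_train`.

```python
# Hypothetical timetable. Only "Geneva, 08:08, platform 6" and
# "Geneva Airport, platform 5" are taken from the article text;
# all other values are made up for this example.
timetable = [
    {"departure": "08:04", "train": "101", "destination": "Lucerne", "platform": 3},
    {"departure": "08:08", "train": "102", "destination": "Geneva", "platform": 6},
    {"departure": "08:12", "train": "103", "destination": "Geneva Airport", "platform": 5},
    {"departure": "08:38", "train": "104", "destination": "Geneva", "platform": 6},
]


def next_train(rows, **criteria):
    """Return the earliest row whose columns match all criteria, or None."""
    matches = [
        row for row in rows
        if all(row[name] == value for name, value in criteria.items())
    ]
    # Zero-padded "HH:MM" strings sort in chronological order.
    return min(matches, key=lambda row: row["departure"], default=None)


geneva = next_train(timetable, destination="Geneva")
print(geneva["departure"], geneva["platform"])            # 08:08 6
print(next_train(timetable, platform=5)["destination"])   # Geneva Airport
```

Any column can serve as a search criterion without changing the function, which is the practical benefit of recording the same details for every train.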

Humans have been recording data in this way for thousands of years and we’re still doing it today. Train timetables, financial accounts, playlists, football league tables – they all present data structured in a tabular format.

The most important aspect of tabular data for AI is its clear and consistent structure, which makes it easy to process. For this reason many AI systems transform other data types into some form of tabular or grid-like format before processing them. Sales forecasts, customer loyalty, movie recommendations, fraud detection – the data behind all of them can be stored in tabular form.
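One simple way to picture such a transformation is a word-count table, sometimes called a bag of words: each text becomes a row and each distinct word becomes a column. The sketch below illustrates that one idea only and does not describe how any particular AI system works. The two example sentences and the function name `to_word_table` are made up.

```python
from collections import Counter


def to_word_table(texts):
    """Turn free text into a table: one row per text, one column per word."""
    counts = [Counter(text.lower().split()) for text in texts]
    # The column headers are all distinct words, in alphabetical order.
    vocabulary = sorted({word for count in counts for word in count})
    # A Counter returns 0 for words that do not occur in a text.
    rows = [[count[word] for word in vocabulary] for count in counts]
    return vocabulary, rows


vocabulary, rows = to_word_table(["the train leaves soon", "the train is late"])

print(vocabulary)  # ['is', 'late', 'leaves', 'soon', 'the', 'train']
for row in rows:
    print(row)
# [0, 0, 1, 1, 1, 1]
# [1, 1, 0, 0, 1, 1]
```

The result has the same shape as any other table: every row has a value in every column, even though the original sentences had no such structure. Word order is lost in the process, which hints at why text is harder to work with than a timetable.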

2. Text

Another very common form of data is text. Human beings have used all sorts of different writing systems to record information for many thousands of years. In Ancient Egypt, writing was done on papyrus. Fast-forward a few millennia to the medieval period and we find the invention of the printing press. Today we record our writings in digital files like Word documents.

But from papyrus to PC, the principle remains the same. Throughout history we’ve used writing to make detailed recordings of every aspect of our lives and surroundings. Language is a great way of recording information because of its versatility and richness. Just think of all the ways you could use language to describe an everyday occurrence.

The Complexity of Language

Unlike tabular data, text is an unstructured way of recording information. We have words, phrases, sentences and paragraphs, but there is no consistent pattern or set of rules that tells us how information is encoded in language and where it can be found in any given text. Sometimes additional structure is provided to guide us: a contents page, or maybe an index. Newspapers make it easier for us to find what we want by grouping text according to topic, e.g. news at the front and sports at the back. But we are still left with unstructured text when it comes to reading the individual articles.

Language is a complex human construct. As children we learn to associate combinations of spoken words with meanings and concepts. We also learn nuance in the way we use language. For example, when our parents ask if we can put the milk on the table, we understand that this means placing the milk bottle on the table, and not pouring the milk directly onto it.

Language can be very precise, but all too often it is ambiguous and leaves room for interpretations. Sometimes we have to “read between the lines”. Language can be used to express opinions, doubts, feelings and emotions, and all of these things lead to subjective interpretations. Extracting all of the information from a text with complete accuracy is challenging, even for humans.

How AI works with text.

Text is one of the most common ways of conveying information. However, the range and complexity of information contained in text, the lack of consistent structure, the dependence on context, and the general ambiguity of meaning make it very difficult for AI to interpret textual data.

Business data, medical diagnoses, emails, social media posts – they’re all conveyed in the form of text. The prevalence of text means that text mining and natural language processing (NLP) are important research fields in contemporary AI.

These technologies already assist us by proofreading our texts for spelling and grammar, and by predicting the end of our sentences as we are writing them. AI can also automatically translate our text into another language, one we may not even speak. The reliability of translations done by AI has increased hugely in recent times and mistakes are much rarer than they used to be.

AI systems are already applied in customer service. Natural language processing is used to automatically identify topics, names, and locations in written requests before forwarding them to the relevant departments. Some requests are handled by chatbots, which can communicate with users and even fulfill the request without any human input at all.

Companies also use AI to conduct sentiment analysis on their customer reviews to understand how people feel about their products and services. Thanks to AI technologies, all of these tasks can be automated, at scale, and in real-time.
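As a rough illustration of what sentiment analysis does, the toy Python sketch below labels a review by counting words from two small hand-made lists. This is a deliberately simplified stand-in: real systems learn from large amounts of example text rather than relying on fixed word lists, and the word lists, the sample reviews, and the function name `sentiment` are all invented for this example.

```python
# Tiny hand-made word lists, invented for this example.
POSITIVE = {"great", "love", "excellent", "fast", "friendly"}
NEGATIVE = {"bad", "slow", "broken", "rude", "terrible"}


def sentiment(review):
    """Label a review by counting its positive and negative words."""
    # Lowercase each word and strip common punctuation from its ends.
    words = [word.strip(".,!?").lower() for word in review.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "Great product, fast delivery!",
    "The app is slow and the support was rude.",
    "It arrived on Tuesday.",
]
for review in reviews:
    print(sentiment(review), "-", review)
```

A word counter like this cannot read between the lines: it would label "not great" as positive. That gap between counting words and understanding meaning is the reason this task calls for more capable language models.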

3. Audio Data

All of the problems we find with text are magnified when it comes to audio data. With audio we’re still dealing with all of the complexity and ambiguity of language, but here we also have to deal with different accents, intonations, emotions, as well as varying volume and speed of speech. Humans are able to overcome these problems by learning to recognize and distinguish people by their voice, and also to detect emotions in the way a person delivers their words.

But oral communication becomes difficult when we’re having a conversation in a noisy environment, or with someone who has a strong accent or different dialect. It becomes even harder when we’re trying to communicate in a second language. For AI to understand verbal commands or speech, or to identify a person from the sound of their voice, it needs to overcome these challenges too.

How AI works with audio data.

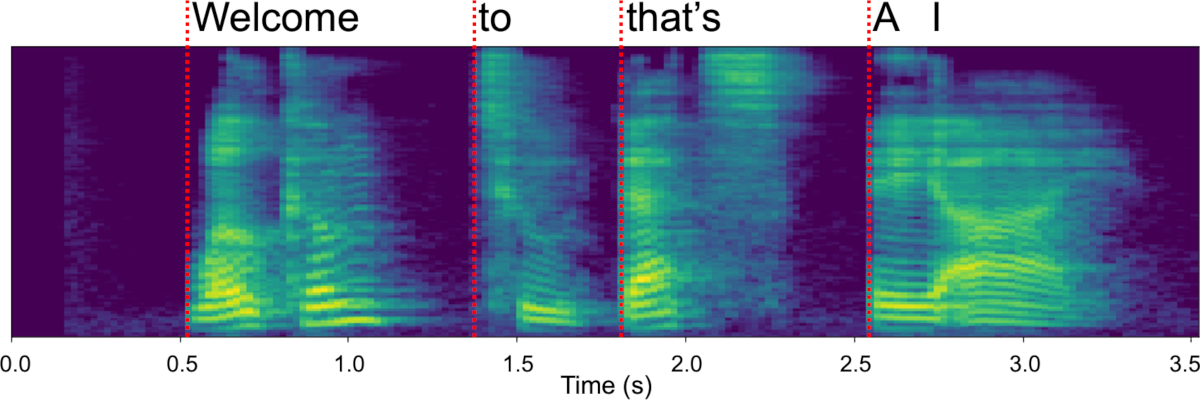

One area where AI technology is being applied to audio data is automatic speech recognition, also known as speech transcription or speech-to-text. This allows you to use your phone hands-free, and also gives people like doctors the ability to save valuable time by simply dictating their notes instead of having to write them down. Automatically generated subtitles are also a common feature on videos and TV, although you may still find occasional errors. It’s not always perfect, but it is always improving.

Speech recognition is also applied in smart digital assistants such as Alexa and Siri. They allow us to control our devices without any physical interaction, and over time they learn how to best understand our voice, answer our queries, and respond to our commands.

AI can also be used in the opposite direction by converting text into speech. This technology gives your digital assistants a voice when they respond to your queries. It also enables your web browser to read your articles aloud to you. Since 2019 it has been possible to do automated language translation directly from speech to speech, without having to pass through text along the way. Soon it may be possible to make the translation sound like you, complete with your accent.

It’s important to remember that audio data is not just limited to speech. Audio data also includes sounds like music, animal noises, jet engines and ticking clocks.

Let’s consider music for a moment. Humans can distinguish between genres when they listen to music; we can tell the difference between classical and heavy metal. We can also tell the difference between brass and woodwind, or between a guitar and a piano. AI is capable of classifying the mood of songs and can automate the labeling of new tracks – this helps platforms such as Spotify to filter and classify their music.

There are also other things we can identify just by listening and applying our expertise. For example, an experienced car mechanic can listen to an engine and know if something is wrong with it even if there’s no visible sign of anything being broken. AI is learning to do the same by analyzing the sound profiles of mechanical components so that it can detect malfunctions inside a machine without having to open it.

4. Visual Data: Images and Videos

Vision is our most highly-developed sense and we rely on it almost completely when we’re orienting ourselves in our environments.

Human brains are able to process visual information at incredible speed and volume. At a glance we are able to identify shapes, colors, shading, and textures and then combine them to identify objects and people without even a moment’s hesitation. We’re also able to interpret the subtleties of facial expressions, which can reveal a lot of information about a person’s underlying emotional state. Visual data records a vast amount and variety of information all at once. As the saying goes, a picture is worth a thousand words.

When we take a longer look, we’re able to analyze the motion of objects and people, read facial expressions and hand gestures, and identify changes. Our brains can combine all of this information to create a narrative of the events unfolding in front of us.

We can also forecast what might happen next and act accordingly. This is something we’re constantly doing while we’re driving – we take in the speed and direction of the vehicles around us to anticipate what they might do next. For example, if you think the car to the left is about to move into your lane, you slow down to avoid a potential collision. Recognition, analysis, evaluation and forecasting all happen within split seconds in our brains.

How AI works with visual data.

Visual recognition plays an important role in many AI technologies and it has a wide range of different applications across multiple industries. Let’s think briefly about text again and the process of digitizing it. Data recorded in a physical way is referred to as “analog”, and a vast amount of textual data exists either in printed or handwritten form. To make this data searchable it needs to be digitized.

Consider how long it would take humans to manually input all of the world’s analog texts into a machine. Fortunately, we can automate this process with an AI technology called Optical Character Recognition (OCR) – or machine reading – which is much faster than human transcription and, on clean printed text, can also be less error-prone.

This technology is used when you scan receipts or invoices with a payment app, when you search for words in scanned documents, and for automatically entering information from forms into databases. It also plays an important role in supply chains and logistics by reading shipping and customs documentation, identifying cargo, and tracking goods. The same technology is used to read out texts for people who are blind or partially sighted.

These achievements are impressive enough, but computer vision has already moved beyond simple machine reading. Your smartphone is able to recognize your face or fingerprint so only you can gain access to it. Your photo library can automatically group all of your photos according to the people in them.

The technology that makes this possible is also able to recognize objects in images and then label and categorize them. Self-driving cars make use of the same technology to read road signs, monitor other vehicles, and identify potential obstacles and pedestrians to plan their next move. Robots use this technology to identify objects and their precise location so that they can pick them up and manipulate them.

Uses for computer vision.

Existing and potential use cases for computer vision are incredibly varied. Factories use it to automatically detect faulty or damaged goods and remove them from the production line. Organizations like insurance companies and government agencies apply computer vision to aerial and satellite data to evaluate the extent of natural disasters or identify suspicious military activity. Security firms can use it to identify intruders in restricted areas and incidents in remote storage locations.

Computer vision also has clear applications for widespread surveillance. In agriculture, farmers equip drones with computer vision technology that can assess their various crops. As a result, these farmers are able to see which crops are ready for harvest and which need more water without even going out into the fields. In medicine, computer vision can check the skin for eczema and melanoma, and in some studies it has read X-rays as accurately as experienced radiologists.

All of these use cases concern recognition, the process of being able to see things and identify them. But we must also consider the field of visual augmentation which involves the manipulation of video. You may already be familiar with video conferencing tools and messaging apps that allow you to turn your office background into an exotic location, or to apply fake eyelashes and beards to your face.

It’s even possible to replace your body with something called an avatar, which is an artificial graphical representation. To do this, AI uses motion tracking to determine the position and orientation of your body before calculating what it thinks will be the most realistic alteration of the images.

Augmented reality can also insert artificial objects into your real-life environment. Some board games already have augmented reality features that pop up on the board to provide a more interactive gaming experience. In medicine, brain surgeons use augmented reality to visualize scans of the brain ahead of surgery, explore sensitive areas, and plan the operation so that it is minimally invasive. Once in the operating room, they can overlay the patient with the scan and additional information to guide their instruments and increase accuracy.

Automatic motion tracking also has uses beyond visual augmentation. For self-driving cars it’s crucial to be able to distinguish between a pedestrian who is safely on the sidewalk and one who is about to step out into the road. In this case, AI will observe the speed and direction of the motion to predict whether there is a possibility of a collision.
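The reasoning from speed and direction to a prediction can be sketched with simple arithmetic. The Python example below is a heavily simplified illustration, not how a real self-driving system works: it assumes the pedestrian keeps a constant speed, looks only at the distance to the road edge, and uses an arbitrary two-second warning horizon. The function names and all numbers are our own.

```python
def seconds_until_road(d_prev, d_now, dt):
    """Estimate when a pedestrian reaches the road edge, assuming constant speed.

    d_prev, d_now: distance to the road edge in meters at two observations
    dt: time between the two observations in seconds
    Returns None if the pedestrian is not moving toward the road.
    """
    speed_toward_road = (d_prev - d_now) / dt
    if speed_toward_road <= 0:
        return None
    return d_now / speed_toward_road


def may_step_out(d_prev, d_now, dt, horizon=2.0):
    """True if the pedestrian could reach the road within `horizon` seconds."""
    eta = seconds_until_road(d_prev, d_now, dt)
    return eta is not None and eta <= horizon


# Walking along the sidewalk: the distance to the road does not change.
print(may_step_out(2.0, 2.0, 0.5))  # False
# Walking toward the road at 1 m/s from 1.5 m away: about 1.5 s to the edge.
print(may_step_out(2.0, 1.5, 0.5))  # True
# Same speed, but still 5.5 m away: no immediate risk.
print(may_step_out(6.0, 5.5, 0.5))  # False
```

Two observations are the minimum needed to estimate motion at all. A real system would track many more, and would also have to cope with people who stop, turn, or speed up.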

Motion tracking can also be used to control machines and interfaces without touch, solely by gestures, and it is used in sports [1], where it tracks players and the ball. Traffic lights have also become more effective thanks to motion tracking that monitors traffic flow.

Even supermarkets make use of motion tracking by looking at the routes shoppers take as they make their way around the store. This data is then used to make planning and layout decisions to channel the flow of customers in certain directions. In fact, motion tracking in the local supermarket may soon be able to calculate the cost of your basket by simply identifying the products you picked up from the shelf.

Intermediate Summary

So far, we have looked at some of the most common data types, the ones we encounter regularly in our daily life: tables, text, sound, images and videos. As we have seen, each one can provide us with unique insights and help us build powerful AI systems. In the next article, we will encounter data types that you might not immediately consider as data – and yet they are. Because, as you will see, everything is data!

---

[1] You can find out more in our article AI in Sports.